Getting alerts about flaky ECS tasks in Slack

At work, we use Amazon ECS to run some of our Docker-based services. ECS is a container orchestrator, similar to Kubernetes: we tell it what Docker images we want to run in what configuration, and it stops or starts containers to match. If a container stops unexpectedly, ECS starts new containers automatically to replace it.

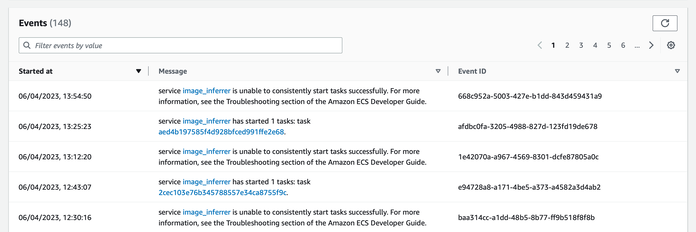

Occasionally, we see “unable to consistently start tasks successfully” messages in the “Deployments and events” tab of the ECS console:

This has always been a mistake that we’ve made – maybe we’ve introduced a bug that crashes the container, we’re trying to launch a non-existent container, or we’ve messed up the ever-fiddly IAM permissions. Whatever it is, we need to investigate.

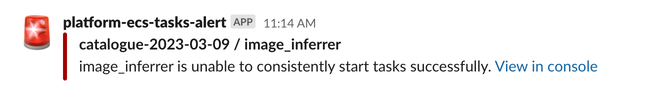

So we know when to investigate, we’ve set up an alerting system that tells us in Slack whenever ECS is struggling to start tasks:

The alert tells us the name of the affected ECS cluster and service, and includes a link to the relevant part of the AWS console. We include clickable links in a lot of our Slack alerts, to make it as easy as possible to start debugging.

This is what it looks like under the hood:

ECS publishes a stream of events to CloudWatch Events, and within CloudWatch we’re filtering for the “unable to consistently start tasks successfully” events. In particular, we’ve got an event pattern that looks for the SERVICE_TASK_START_IMPAIRED event name.

Any matching events trigger a Lambda function, which extracts the key information from the event, gets a Slack webhook URL from Secrets Manager, then posts a message to Slack. This is a standard pattern for alerting Lambdas that we’ve used multiple times.

If you’re interested, all the code for this setup is publicly available under an MIT licence. Both the Terraform definitions and Lambda source code are in our platform-infrastructure repo.

And now if you’ll excuse me, there’s an ECS task that needs my attention…